What Makes Genie 3 a Breakthrough?

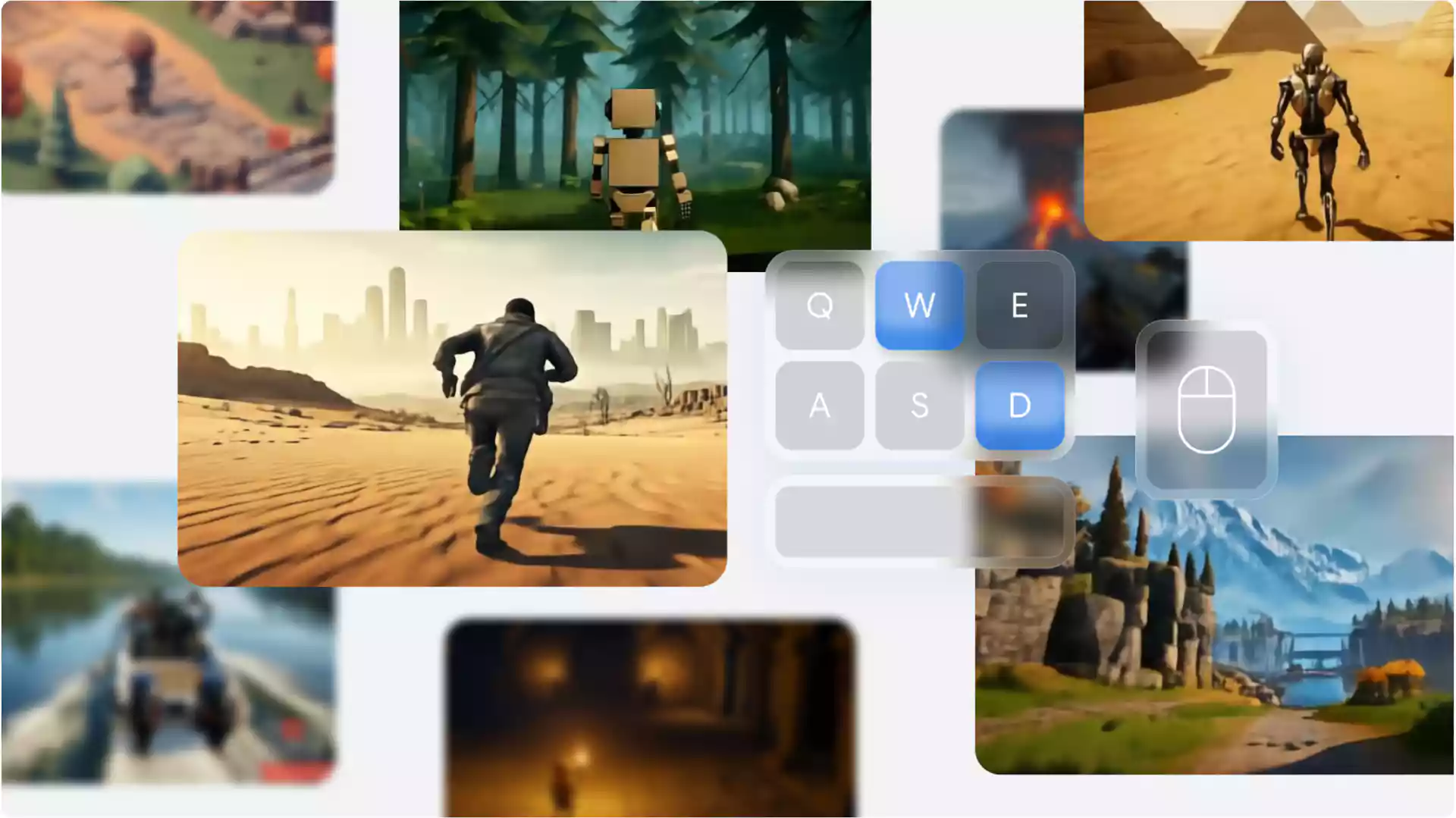

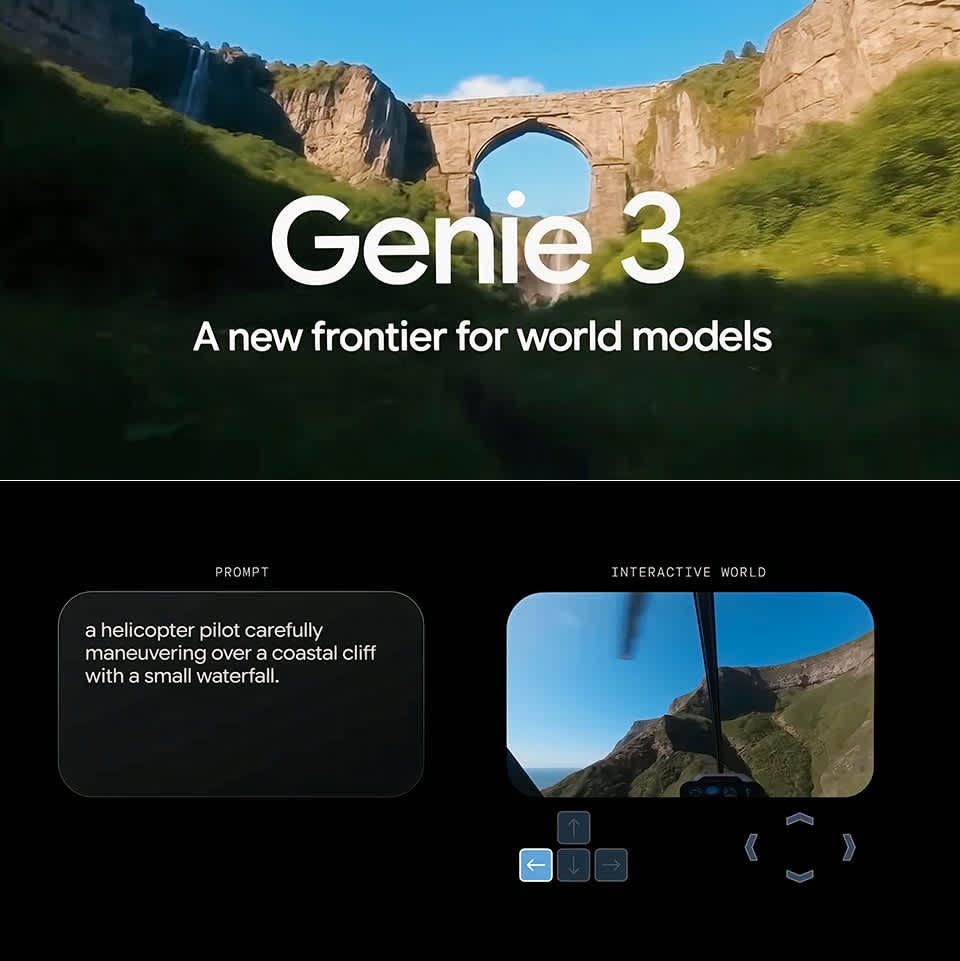

Open a text box, type “snow-covered village at sunrise,” and watch a living scene stream to your screen, complete with fluttering banners, smoking chimneys, and footprints that stay where you left them. Genie 3 is Google DeepMind’s most advanced world model to date, capable of generating coherent, navigable environments on the fly. It is far more than a fancy video generator: you can steer it in real time, changing the weather or injecting new objects and characters without restarting the simulation.

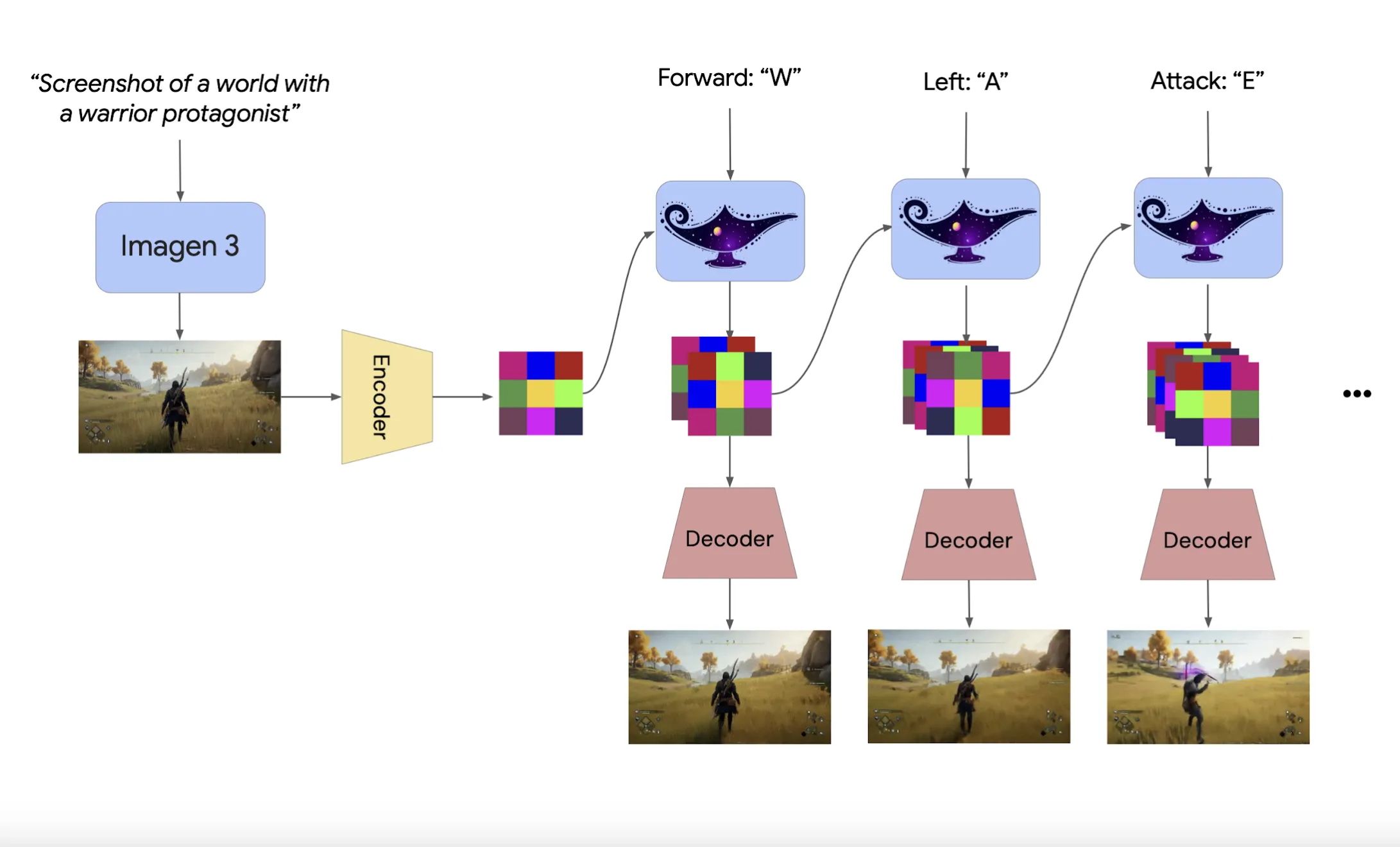

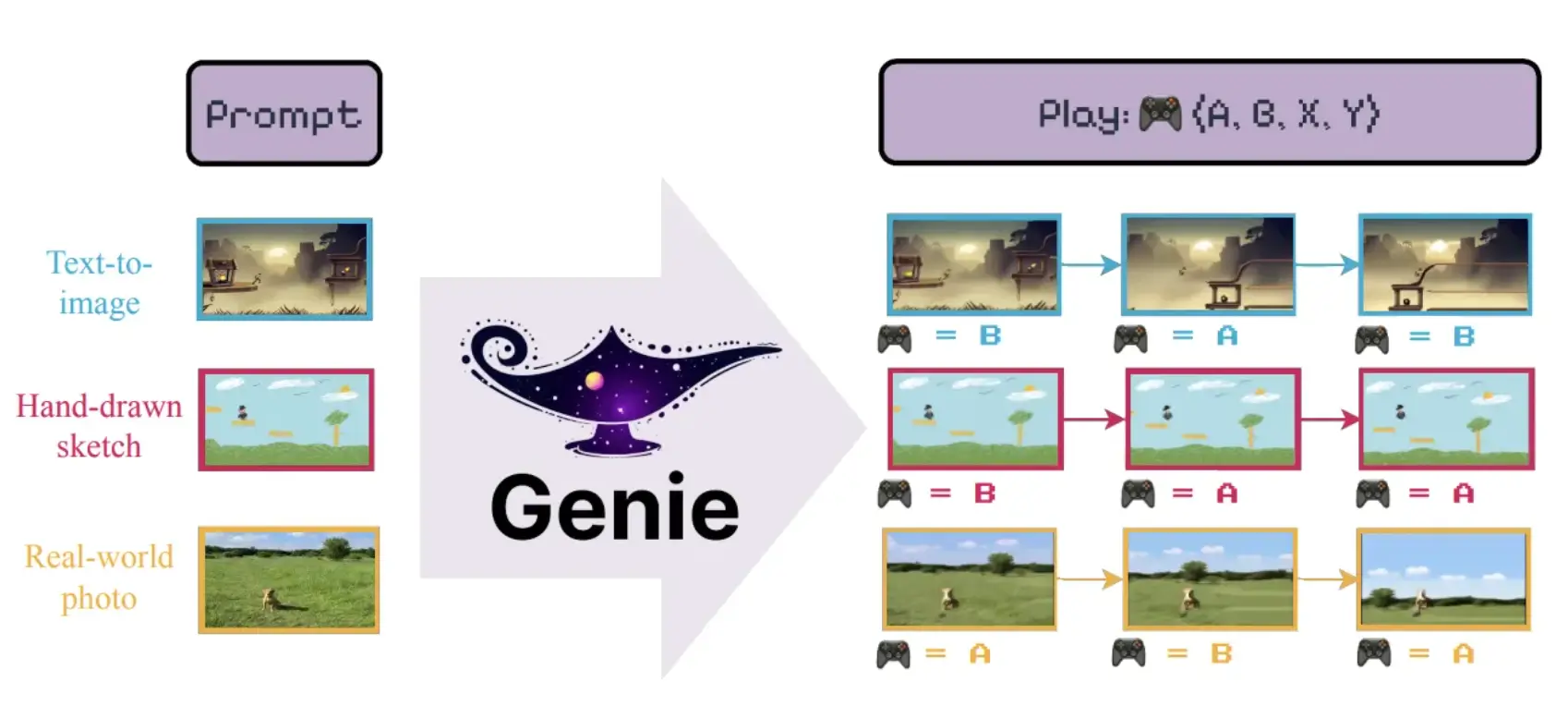

How Genie 3 Works Under the Hood
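DeepMind’s announcement describes Genie 3 as generating frames auto-regressively: each new frame is conditioned on the trajectory generated so far and on the user’s latest input. The toy sketch below shows only that control flow, under loud assumptions. `ToyWorldModel`, `Action`, and `step` are hypothetical names, a caption string stands in for each image, and a fixed-size `deque` stands in for the model’s limited memory. It is not Genie 3’s architecture or API.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum
from typing import Deque, Optional


class Action(Enum):
    # Hypothetical action vocabulary echoing the article's "move, look" controls.
    MOVE = "move"
    LOOK = "look"


@dataclass(frozen=True)
class Frame:
    index: int
    caption: str  # placeholder for a real 1280 x 720 RGB image


class ToyWorldModel:
    """Hypothetical stand-in for an autoregressive world model.

    This is NOT Genie 3's API or architecture. It only shows the control flow:
    each frame is produced from (recent frames, current prompt, user action).
    """

    def __init__(self, prompt: str, context_frames: int) -> None:
        self.prompt = prompt
        self.steps = 0
        # Bounded history: frames older than the window fall out of memory.
        self.context: Deque[Frame] = deque(maxlen=context_frames)

    def step(self, action: Action, event: Optional[str] = None) -> Frame:
        if event is not None:
            # Mid-scene steering: fold the new text event into the conditioning.
            self.prompt = f"{self.prompt}; {event}"
        # A real model would run a neural network conditioned on self.context,
        # self.prompt and action. Here we only record what it would see.
        caption = f"{self.prompt} | {action.value} | ctx={len(self.context)}"
        frame = Frame(self.steps, caption)
        self.context.append(frame)
        self.steps += 1
        return frame


if __name__ == "__main__":
    model = ToyWorldModel("snow-covered village at sunrise", context_frames=3)
    events = {2: "light snowfall begins"}  # text events keyed by step number
    for t in range(5):
        frame = model.step(Action.MOVE, events.get(t))
        print(frame.index, frame.caption)
```

Once the `deque` reaches `maxlen`, each append evicts the oldest frame. That is a crude way to picture why coherence fades over long sessions; how Genie 3 actually stores and retrieves past context is not something this sketch claims to reproduce.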

Key Capabilities at a Glance

| Capability | Detail | Practical Win |
|---|---|---|
| Resolution & Frame Rate | 1280 × 720 @ 24 FPS | Smooth enough for rapid prototyping |
| Memory Window | ≈ 60 s world coherence | Sustains story beats and puzzles |
| Prompt Fusion | Natural-language steering mid-scene | Designers can iterate live |
| Domain Flexibility | Photorealistic, stylized, or abstract | Matches a studio’s visual identity |
| Physics Fidelity | Mostly realistic with artistic deviations | Ideal for imaginative level design |
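The table’s numbers imply a few useful back-of-the-envelope figures. This short sketch derives them, assuming the listed values (24 FPS, 1280 × 720, roughly 60 s of memory) are exact, which they may not be. At 24 FPS each frame must be ready in about 41.7 ms to keep pace in real time, and a 60-second memory window spans about 1,440 frames.

```python
# Inputs copied from the capability table; everything printed is derived.
FPS = 24
WIDTH, HEIGHT = 1280, 720
MEMORY_WINDOW_S = 60  # approximate, per the table


def frame_budget_ms(fps: int) -> float:
    """Wall-clock time available to produce each frame in real time."""
    return 1000.0 / fps


def frames_in_window(seconds: float, fps: int) -> int:
    """Number of frames covered by a memory window of the given length."""
    return round(seconds * fps)


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixel throughput the generator must sustain."""
    return width * height * fps


print(f"{frame_budget_ms(FPS):.1f} ms per frame")  # 41.7 ms per frame
print(frames_in_window(MEMORY_WINDOW_S, FPS), "frames of context")  # 1440 frames of context
print(pixels_per_second(WIDTH, HEIGHT, FPS), "pixels per second")  # 22118400 pixels per second
```

These are rough sizing figures, not measurements of Genie 3: they ignore network latency, streaming overhead, and however the model internally compresses past frames.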

Why It Matters to Different Industries

Game Studios

- Rapid “white-box” level exploration, potentially in minutes instead of weeks.

- Prompt crafting is turning into a design discipline as crucial as shader work.

Robotics & AI Research

- Physical test rigs are costly and fragile; Genie 3 offers a cheaper, lower-risk sandbox for training and evaluating control policies.
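To make the robotics use case concrete, here is a minimal sketch of how a policy-training loop might treat a generated world as an environment. Everything in it is hypothetical: Genie 3 does not expose this interface, `GeneratedWorldEnv` is an invented stand-in that returns random observations, and the reward is a placeholder. The pattern itself is the standard reset/step rollout loop used with reinforcement-learning environments.

```python
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class StepResult:
    observation: List[float]  # stand-in for an image frame
    reward: float
    done: bool


class GeneratedWorldEnv:
    """Hypothetical wrapper around a world model; not a real Genie 3 API."""

    ACTIONS = ("move", "look_left", "look_right")

    def __init__(self, prompt: str, max_steps: int = 50, seed: int = 0) -> None:
        self.prompt = prompt
        self.max_steps = max_steps
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self) -> List[float]:
        self.t = 0
        # A real environment would generate the opening frame from self.prompt.
        return [self.rng.random() for _ in range(4)]

    def step(self, action: str) -> StepResult:
        if action not in self.ACTIONS:
            raise ValueError(f"unsupported action: {action}")
        self.t += 1
        obs = [self.rng.random() for _ in range(4)]
        # Placeholder reward: a real task would score progress toward a goal.
        reward = 1.0 if action == "move" else 0.0
        return StepResult(obs, reward, done=self.t >= self.max_steps)


def rollout(env: GeneratedWorldEnv, policy: Callable[[List[float]], str]) -> float:
    """Run one episode and return the total reward collected."""
    obs = env.reset()
    total = 0.0
    while True:
        result = env.step(policy(obs))
        total += result.reward
        obs = result.observation
        if result.done:
            return total


def always_move(obs: List[float]) -> str:
    # Trivial policy used only to exercise the loop.
    return "move"


if __name__ == "__main__":
    env = GeneratedWorldEnv("cluttered warehouse aisle", max_steps=20)
    print(rollout(env, always_move))  # 20.0
```

Because the environment hides behind `reset` and `step`, the same policy code could later run against a physics simulator or a real robot, which is what makes a generated sandbox useful as a cheap first stage.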

Brand Marketing & Events

- Spin up interactive product showcases or virtual pop-ups without a single line of GLSL.

Education & Training

- Emergency-response drills, historical walk-throughs, or lab safety lessons can be generated on demand.

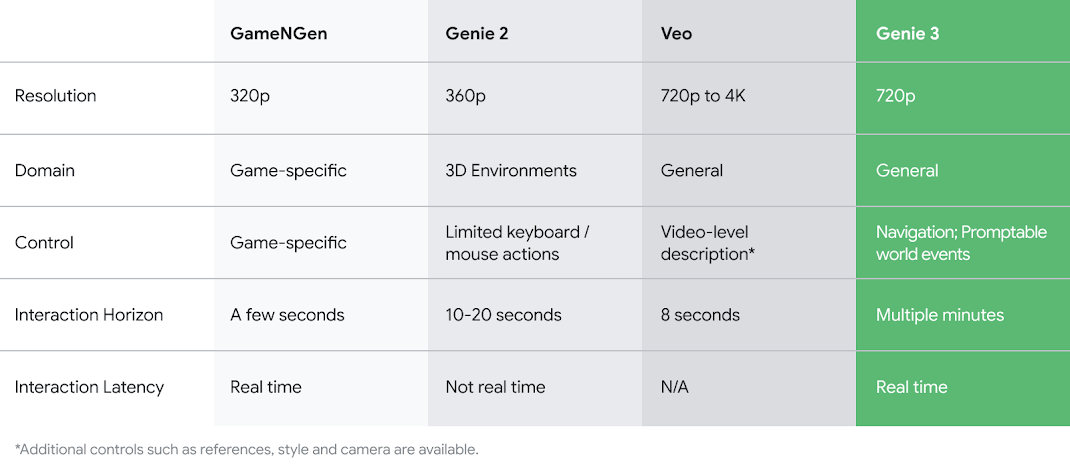

Competitive Snapshot (2025)

| System | Core Strength | What Sets Genie 3 Apart |
|---|---|---|
| NVIDIA ACE | Conversational NPC personalities | Genie produces the entire world, not just the character AI. |
| Runway GEN-3 | Cinematic post-production clips | Genie streams continuous, controllable gameplay footage. |
| Tencent Hunyuan-VR | Mesh-accurate VR environment export | Genie focuses on speed and improvisation over geometric precision. |

Current Limitations

- Coherence fades after a few minutes of continuous interaction; expect drifting textures or physics anomalies.

- Action vocabulary is still small (move, look, contextual motion).

- Cloud inference bills remain steep; local hardware isn’t viable—yet.

- Building copyright-clean datasets is an active research area.

Roadmap Hints from DeepMind

- Hour-long stable simulations.

- Multi-agent social dynamics.

- Joint hardware-software optimizations to slash inference cost.

- Fully licensed or synthetic training corpora to minimize IP risk.

Action Items for You

Developers: If you have access (Genie 3 launched as a limited research preview), open a private branch, pipe Genie 3 output into your existing engine, and evaluate where generative iteration beats hand-crafted grayboxing.
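One way to pipe generated output into an existing engine is to decouple the generator from the engine’s render loop with a small bounded buffer, so a slow or bursty generator never stalls rendering. The sketch below assumes frames arrive from some hypothetical producer (no public Genie 3 streaming API is assumed); `FrameBuffer` and `fake_generator` are invented names, and integers stand in for images. When the buffer is full, the oldest frame is dropped, trading completeness for low latency.

```python
import threading
from collections import deque
from typing import Deque, Generic, Optional, TypeVar

T = TypeVar("T")


class FrameBuffer(Generic[T]):
    """Thread-safe bounded FIFO that drops the oldest frame when full."""

    def __init__(self, capacity: int) -> None:
        self._frames: Deque[T] = deque(maxlen=capacity)
        self._lock = threading.Lock()
        self.dropped = 0

    def push(self, frame: T) -> None:
        # Called from the generator thread.
        with self._lock:
            if len(self._frames) == self._frames.maxlen:
                self.dropped += 1  # the deque evicts its oldest item on append
            self._frames.append(frame)

    def pop(self) -> Optional[T]:
        # Called once per engine tick; None means "keep showing the last frame".
        with self._lock:
            return self._frames.popleft() if self._frames else None


def fake_generator(buffer: "FrameBuffer[int]", count: int) -> None:
    # Hypothetical producer standing in for frames streamed from a world model.
    for i in range(count):
        buffer.push(i)


if __name__ == "__main__":
    buf: FrameBuffer[int] = FrameBuffer(capacity=4)
    producer = threading.Thread(target=fake_generator, args=(buf, 10))
    producer.start()
    producer.join()
    shown = []
    while (frame := buf.pop()) is not None:
        shown.append(frame)  # an engine would upload this as a texture
    print(shown, "dropped:", buf.dropped)  # [6, 7, 8, 9] dropped: 6
```

Dropping old frames suits interactive previews; if you are recording sessions for later review, a blocking queue that never discards frames is the safer choice.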

Studios & Publishers: Budget for hybrid pipelines that use Genie for ideation, then rebuild successful scenes in Unreal or Unity for final polish; Genie outputs rendered frames, not engine-ready assets.

Enterprise Trainers: Begin with low-risk modules (warehouse layouts, onboarding tours) while monitoring advances in physics fidelity.

Investors: Watch for “middleware” plays—asset converters, data-sanitization services, and low-latency inference providers.

Policymakers: Draft guidelines on transparency, synthetic data, and environmental impact before fully generative worlds go mainstream.

Final Word

Genie 3 doesn’t just paint pretty pictures; it lets you step inside them, tweak the weather, and add new story beats before the last frame renders. Whether you build games, teach robots, or market sneakers, the ability to conjure interactive worlds from plain English is about to reshape your workflow. Experiment early, iterate often, and keep an eye on the roadmap—because today’s novelty is tomorrow’s production standard.